Artificial Intelligence is a vast topic, but I’d like to discuss it from just a couple of angles and see where we go from there. Let’s start with Alan Turing.

(Listen to the Retrospectical Podcast Episode 10: Artificial Intelligence and the Kurzweil Singularity)

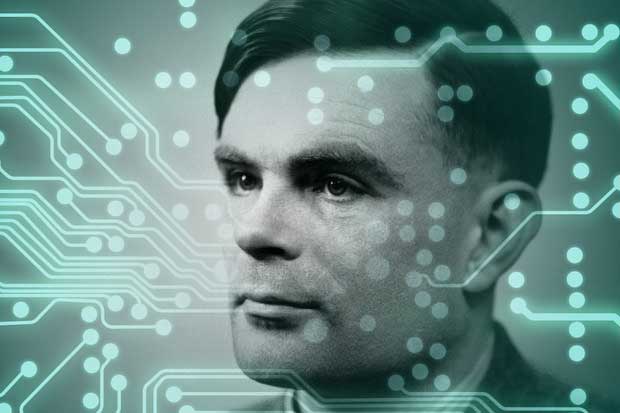

Alan Turing

Now widely considered the father of artificial intelligence, Turing is famous for his wartime cryptanalysis during World War II (breaking enemy codes rather than creating them), but he had previously studied and contributed to many other areas of science and mathematics as well.

At the time, no one had even coined the term ‘Artificial Intelligence’. Computers could only process input fed to them on punch cards, filled entire rooms, and were built from thousands of vacuum tubes.

Turing thought that, given enough processing power and the inevitable advances of technology, it could become so hard to tell a person and a computer apart in conversation that the distinction would effectively cease to exist. In his 1950 paper “Computing Machinery and Intelligence”, he proposed a test built on this idea, now known as the Turing test.

The test is based on an old parlour game (“The Imitation Game”, which also happens to be the title of a recent film about Alan Turing’s life and death, starring Benedict Cumberbatch, that everyone should go see) in which a man and a woman, hidden from view, each try to convince a judge that they are the woman. The woman has to answer honestly, while the man can answer however he likes in order to convince the judge that he is, in fact, the woman. Turing’s version of the test puts the computer in the role of the man, trying to convince the judge that it is, in fact, the human.

Although there have been many attempts to pass the Turing test, most have failed. A few have recently appeared to win or come close to winning, such as the Russian chatbot Eugene Goostman, which convinced 1 in 3 judges that it was a 13-year-old Ukrainian boy who did not speak English as his first language. Goostman technically won, but it is simply a computer program that runs scripts and plays tricks (such as deliberately misspelling words) to fool the judges. Most would argue that no computer has yet passed the Turing test in its original spirit. http://io9.com/a-chatbot-has-passed-the-turing-test-for-the-first-ti-1587834715

Others working in the field of artificial intelligence argue that the Turing test is inherently flawed: any computer that manages to fool the judges will be doing just that, fooling them with tricks rather than demonstrating actual comprehension and intelligence. These critics have also proposed a series of alternative tests that aim at the same goal without leaving the same loopholes: http://io9.com/8-possible-alternatives-to-the-turing-test-1697983985

Ray Kurzweil

Kurzweil is a mad genius. He is widely recognized as a force of intelligence and innovation, which is hard to dispute in good faith: he developed the first CCD flatbed scanner, the first print-to-speech reading machine for the blind and the Kurzweil line of music synthesizers, and he helped pioneer optical character recognition. He has also, sometimes in the same breath, been called crazy. He has borrowed science fiction terms like the ‘Singularity’ for use in his books and talks. The term refers to a point (in the near future, according to Kurzweil) at which technological progress outstrips our ability to comprehend that same technology.

This ‘Kurzweilian’ view of the future, as it has come to be known, was recently popularized by Her, a successful science fiction film that deals directly with the Singularity and that I highly recommend. Kurzweil himself reviewed the film, adding his own take on its ending:

In my view, biological humans will not be outpaced by the AIs because they (we) will enhance themselves (ourselves) with AI. It will not be us versus the machines (whether the machines are enemies or lovers), but rather, we will enhance our own capacity by merging with our intelligent creations. We are doing this already. Even though most of our computers — although not all — are not yet physically inside us, I consider that to be an arbitrary distinction.

They are already slipping into our ears and eyes and some, such as Parkinson’s implants, are already connected into our brains. A kid in Africa with a smartphone has instant access to more knowledge than the President of the United States had just 15 years ago. We have always created and used our technology to extend our reach. We couldn’t reach the fruit at that higher branch a thousand years ago, so we fashioned a tool to extend our physical reach. We have already vastly extended our mental reach, and that is going to continue at an exponential pace.

Excerpted from http://www.kurzweilai.net/a-review-of-her-by-ray-kurzweil

The mind-boggling thing is just how many people out there trust and respect his work and his many predictions. In fact, Google recently hired Kurzweil as a Director of Engineering, where he works on machine learning and natural language understanding. Google has spent billions of dollars in the past few years acquiring companies such as Boston Dynamics (maker of animal- and human-like robots, many of them developed with military funding) for an undisclosed sum and Nest Labs (smart thermostats) for $3.2 billion. Last year it also acquired the British artificial intelligence startup DeepMind, which recently demonstrated a system that learned to play dozens of Atari video games from scratch, using only the pixels on the screen and the score, with no game-specific programming. Unlike a system such as Deep Blue, which was designed specifically to play one game, DeepMind’s system performed at or above the level of a professional human games tester on many of those games. What Google is assembling through these acquisitions, with Kurzweil on board, has been referred to more than a few times as the ‘Manhattan Project of AI’.

Kurzweil’s past predictions that turned out to be right, and those he makes for the years ahead: http://singularityhub.com/2015/01/26/ray-kurzweils-mind-boggling-predictions-for-the-next-25-years/

But what about Skynet?

The reality is that artificial intelligence is still limited today, but many in the AI field expect (on widely varying timelines) that computers will eventually surpass us in intelligence (http://www.newyorker.com/online/blogs/elements/2013/10/why-we-should-think-about-the-threat-of-artificial-intelligence.html) and be able to reprogram themselves and other computers to perform any number of tasks, including self-replication and resource gathering.

Elon Musk, Bill Gates and Steve Wozniak have all warned that artificial intelligence could pose a serious threat to humanity. Hundreds of artificial intelligence experts recently signed an open letter put together by the Future of Life Institute, which prompted Elon Musk to donate $10 million to the institute. "We recommend expanded research aimed at ensuring that increasingly capable AI systems are robust and beneficial: our A.I. systems must do what we want them to do," the letter read.

But who does “we” refer to? Presumably the idea is for humanity to somehow collectively decide the direction going forward, but that is never how these kinds of situations play out. Who decides what sort of ethics will be programmed into, or even taught to, these new systems of the future? Is consciousness uniquely human, and could something be built that we would define as ‘intelligent’ but that has no consciousness at all? What consequences will the development of high-level artificial intelligence have for deeply religious people throughout the world?

Musk once tweeted, "We need to be super careful with AI. Potentially more dangerous than nukes." Stephen Hawking has also warned against speeding ahead with AI development without careful consideration: "The development of full artificial intelligence could spell the end of the human race," he said.

As so often happens, the real world may end up taking its cues from science fiction. Back in 1942, Isaac Asimov came up with the Three Laws of Robotics (rules that he imagined being programmed into the most basic code of any artificial intelligence): (1) A robot may not injure a human being or, through inaction, allow a human being to come to harm. (2) A robot must obey the orders given to it by human beings, except where such orders would conflict with the First Law. (3) A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

With all of these possibilities in mind, the question becomes: will this impending technology be controllable? Assuming we succeed in creating something that can think, make decisions and act on those decisions, will the world end up looking the way Kurzweil envisions it (a place where death and poverty no longer exist and we merge with the technology we have created), or will it be decidedly dystopian, with intelligent war machines that could easily turn on humanity altogether?